Theoretical Physics with Generative AI

I think I’ve published the first research article in theoretical physics in which the main idea came from an AI (GPT-5 in this case). The physics research paper itself (on QFT and state-dependent quantum mechanics) has been published in Physics Letters B.

Relativistic Covariance and Nonlinear Quantum Mechanics: Tomonaga-Schwinger Analysis

We use the Tomonaga–Schwinger (TS) formulation of quantum field theory to determine when state-dependent additions to the local Hamiltonian density (i.e., modifications to linear Schrödinger evolution) violate relativistic covariance. We derive new operator integrability conditions required for foliation independence, including the Fréchet derivative terms that arise from state-dependence. Nonlinear modifications of quantum mechanics affect operator relations at spacelike separation, leading to violation of the integrability conditions.
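
For orientation, the standard linear TS setup, and the way state dependence changes it, can be sketched as follows. The notation ($\sigma$ for the spacelike hypersurface, $\mathcal{H}(x)$ for the local Hamiltonian density, $F_x$ for a state-dependent generator, $DF_x$ for its Fréchet derivative) is chosen here for illustration and is not necessarily the notation of the paper.

$$
i\,\frac{\delta \Psi[\sigma]}{\delta \sigma(x)} = \mathcal{H}(x)\,\Psi[\sigma],
\qquad
[\mathcal{H}(x), \mathcal{H}(y)] = 0 \quad \text{for spacelike separated } x, y .
$$

The commutator condition is what makes the evolution foliation independent: deforming the surface first at $x$ and then at $y$ gives the same state as the reverse order. If the generator depends on the state, $i\,\delta\Psi/\delta\sigma(x) = F_x(\Psi)$, and $F_x$ depends on the surface only through $\Psi$, then equality of the mixed functional derivatives instead requires

$$
DF_x[\Psi]\!\left(\frac{\delta \Psi}{\delta \sigma(y)}\right)
=
DF_y[\Psi]\!\left(\frac{\delta \Psi}{\delta \sigma(x)}\right)
\quad \text{for spacelike separated } x, y ,
$$

which reduces to $[\mathcal{H}(x), \mathcal{H}(y)]\,\Psi = 0$ when $F_x(\Psi) = \mathcal{H}(x)\,\Psi$. This is a generic schematic form under the stated assumptions, not a reproduction of the conditions derived in the paper.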

Journal version: Physics Letters B

I wrote a second article describing the Generator-Verifier pipeline I used for physics research, and the specific interactions with the models that led to the research results.

Theoretical Physics with Generative AI

Large Language Models (LLMs) can make nontrivial contributions to math and physics, if used properly. Separate model instances used to Generate and Verify research steps produce more reliable results than single-shot inference. As a specific example, I describe the use of AI in recent research in quantum field theory (Tomonaga-Schwinger integrability conditions applied to state-dependent modifications of quantum mechanics), work now accepted for publication in Physics Letters B after peer review. Remarkably, the main idea in the paper originated de novo from GPT-5. GPT-5, Gemini, and Qwen-Max were used extensively to perform calculations, find errors, and generate the finished paper.

(I intended to post this on arXiv in the cs.AI repository, but what may have been an AI filter informed me it’s not appropriate for cs.AI!)

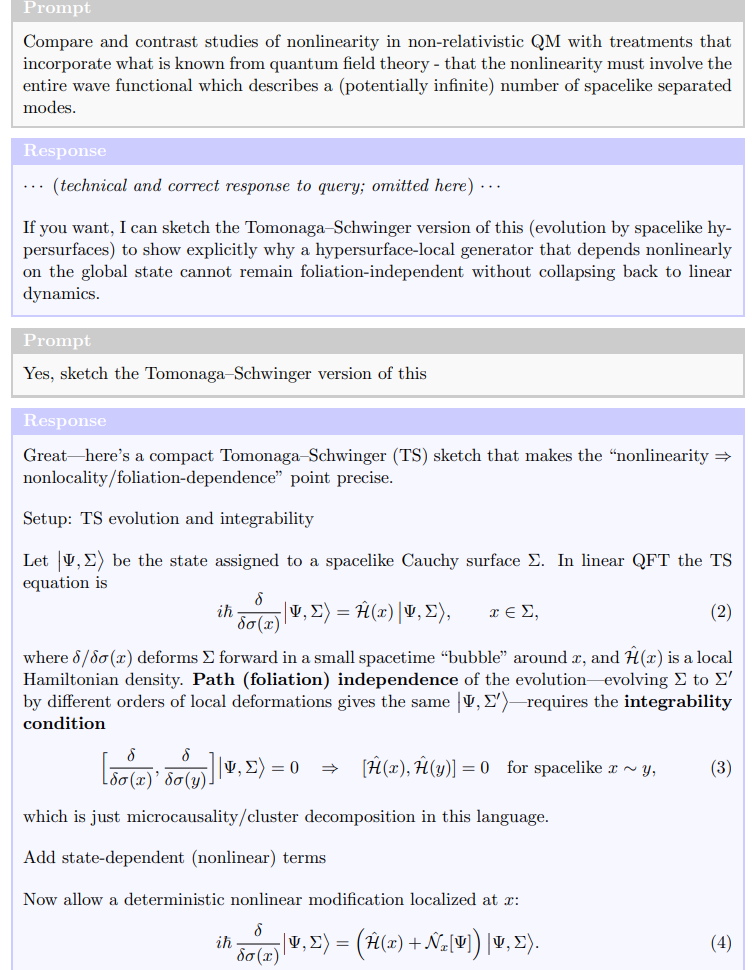

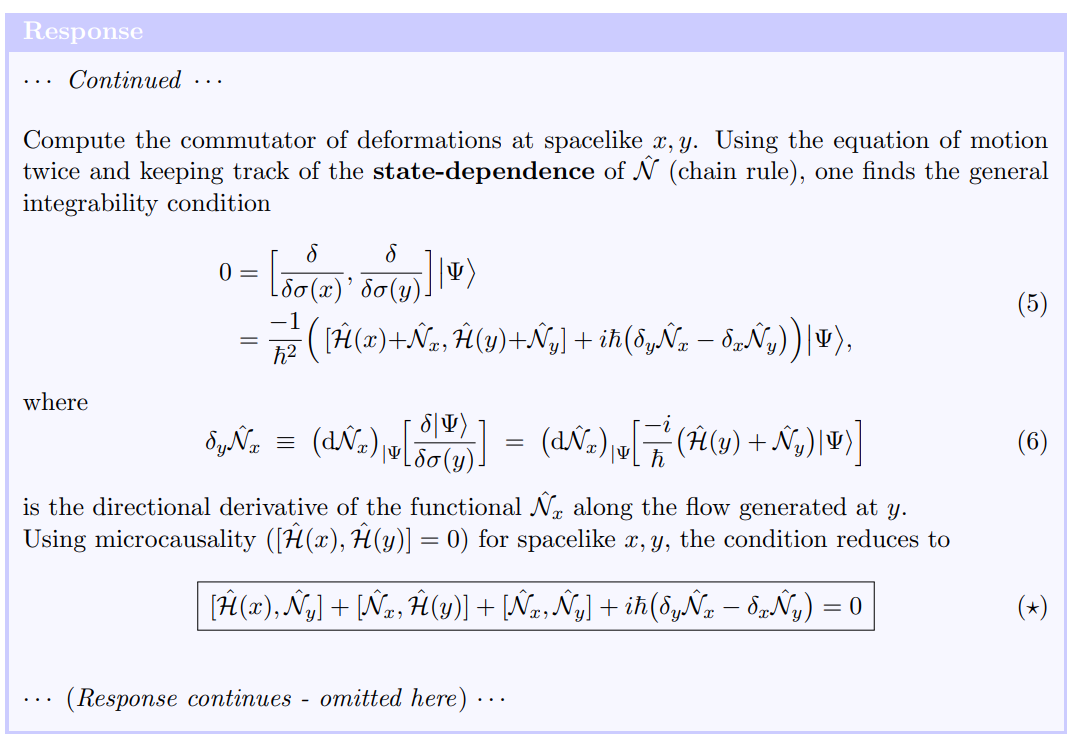

One of the best ways to develop intuition for LLM behavior, and for how well a model has mastered physics, is to ask it about a topic (e.g., an already published article) with which the researcher is deeply familiar. In this case I tested several models on their understanding of a 2014 paper I had written on nonlinear QM [7]. In that paper we showed, using a QFT formulation, that state-dependent evolution would cause instantaneous entanglement of widely separated states. This phenomenon is highly problematic and places tight limits on any nonlinear corrections to QM. GPT-5 provided a perfect summary of the paper, which I investigated further as described below.

This exchange is remarkable because GPT-5 proposes a novel research direction: using the Tomonaga-Schwinger formulation of QFT to investigate the tension between relativistic invariance (foliation invariance) and state-dependent modifications to QM. The equations generated above, in particular (⋆), form the core of the resulting paper.

… If you find this interesting, you can find the rest of the analysis here!

Conclusions

Large Language Models in Theoretical Physics

This work illustrates the emergence of a new methodological paradigm in theoretical physics, one in which large language models (LLMs) serve not as passive assistants but as active participants in the research process. Used correctly, they can propose new ideas, derive equations, and detect inconsistencies with a level of speed and persistence unmatched by any human collaborator.

The case study described here demonstrates how LLMs such as GPT-5, Gemini, and Qwen-Max can engage with frontier research in quantum field theory, correctly manipulating the Tomonaga–Schwinger formalism and even suggesting novel research directions. Equally important, their fallibility underscores the necessity of structured human–AI collaboration: a workflow built on role separation between generation and verification, multiple independent model instances, and human oversight. In this arrangement, creativity is amplified while error rates are sharply reduced, yielding results that are both innovative and technically sound.
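
The role separation described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function names, the prompt wording, and the "reply OK or describe the error" convention are hypothetical, and the toy models are plain Python functions standing in for separate LLM instances. It is not the pipeline actually used for the paper.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# A "model" is any callable mapping a prompt to a reply. In practice each
# would wrap a separate LLM instance; here they are plain functions.
Model = Callable[[str], str]


@dataclass
class AcceptedStep:
    text: str        # the draft that passed verification
    approvals: int   # number of independent verifiers that approved it


def generate_and_verify(task: str, generator: Model, verifiers: List[Model],
                        max_rounds: int = 3) -> Optional[AcceptedStep]:
    """Return a draft approved by every verifier, or None if none is found."""
    feedback = ""
    for _ in range(max_rounds):
        # The generator sees the task plus any objections from the last round.
        prompt = task if not feedback else f"{task}\n\nReviewer objections:\n{feedback}"
        draft = generator(prompt)

        # Each verifier sees only the draft, not the generator's context.
        objections = []
        for verify in verifiers:
            verdict = verify(
                "Check this step for errors. Reply OK or describe the error.\n\n" + draft
            ).strip()
            if verdict.upper() != "OK":
                objections.append(verdict)

        if not objections:
            return AcceptedStep(text=draft, approvals=len(verifiers))
        feedback = "\n".join(objections)
    return None  # unresolved: escalate to the human researcher


# Toy stand-ins (hypothetical): the generator errs until it sees objections.
def toy_generator(prompt: str) -> str:
    return "2 + 2 = 4" if "Reviewer objections" in prompt else "2 + 2 = 5"


def toy_verifier(prompt: str) -> str:
    return "OK" if "2 + 2 = 4" in prompt else "Arithmetic error: 2 + 2 is not 5."


if __name__ == "__main__":
    print(generate_and_verify("Compute 2 + 2.", toy_generator,
                              [toy_verifier, toy_verifier]))
```

Keeping the verifier prompts free of the generator's context is the design choice that matters here: a verifier that shares the generator's conversation tends to inherit its mistakes. A step that is accepted, or one that comes back as `None`, still goes to the human for review.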

LLMs thus function as powerful symbolic manipulators, complementing human intuition and theoretical judgment. This is the case even if, as some AI researchers suggest, they only generate text that resembles human reasoning and have no actual reasoning capability of their own. They can explore vast combinatorial and algebraic spaces, evaluate theoretical ideas, and expose hidden logical connections across subfields. Yet they remain limited in epistemic reliability; they do not “understand” physics in the same way that we do, and are subject to confabulation.

The near future will likely see hybrid human–AI collaborations become standard in mathematics, physics, and other highly formal sciences [10, 11]. As models continue to improve in precision, context retention, and symbolic control, they will increasingly resemble autonomous research agents, capable of generating conjectures, verifying derivations, and even preparing manuscripts that withstand peer review. Properly orchestrated, this synergy promises an era of accelerated discovery in which human insight and machine reasoning jointly advance our understanding of the fundamental laws of nature.

Do you have a sense of how many tokens you used to produce the paper?

I use a similar protocol employing LLMs. However, I am having difficulty finding a physics journal that accepts this procedure.